In the rapidly evolving world of AI and natural language processing (NLP), adapting pre-trained models to specific tasks is essential for achieving optimal performance. Two popular strategies dominate this space: fine-tuning and prompt-tuning. Both aim to make a large language model (LLM) perform better on a particular task, but they approach it very differently. Understanding their strengths, limitations, and use cases is crucial for developers, data scientists, and AI enthusiasts.

What is Fine-tuning?

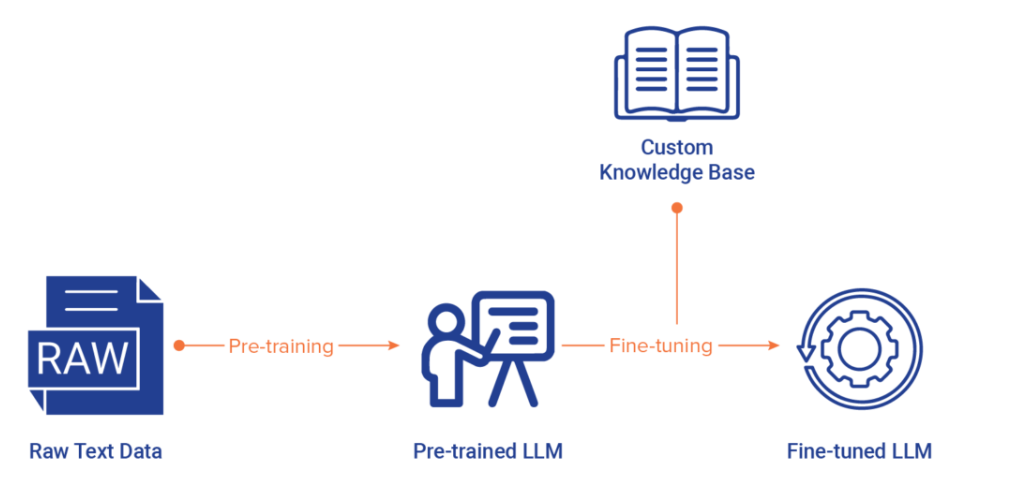

Fine-tuning updates the weights of a pre-trained model using task-specific data. Rather than training from scratch, you continue training the existing model on your dataset so that it becomes more specialized.
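To make the idea concrete, here is a toy sketch of fine-tuning in plain Python. A two-weight linear model stands in for a real LLM, and its starting weights play the role of "pre-trained" weights. The model, data, learning rate, and epoch count are all hypothetical. The point it illustrates is that fine-tuning updates *every* parameter using task-specific examples.

```python
# Toy illustration of fine-tuning: start from "pre-trained" weights and keep
# updating ALL of them with gradient descent on task-specific data.
# The tiny linear model and the numbers below are hypothetical stand-ins
# for a real LLM and dataset.

def predict(weights, bias, x):
    """Linear model: w . x + b."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def mse(weights, bias, data):
    """Mean squared error of the model on (features, target) pairs."""
    return sum((predict(weights, bias, x) - y) ** 2 for x, y in data) / len(data)


def fine_tune(weights, bias, data, lr=0.1, epochs=500):
    """Return new weights after full-parameter gradient descent on MSE loss."""
    weights = list(weights)  # copy, so the original pre-trained weights stay untouched
    for _ in range(epochs):
        grad_w = [0.0] * len(weights)
        grad_b = 0.0
        for x, y in data:
            err = predict(weights, bias, x) - y
            for i, xi in enumerate(x):
                grad_w[i] += 2 * err * xi / len(data)
            grad_b += 2 * err / len(data)
        # Every parameter is updated: this is what distinguishes fine-tuning.
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias


pretrained_w, pretrained_b = [0.5, -0.2], 0.0  # hypothetical "pre-trained" state
# Hypothetical task data following y = 2*x0 + 1*x1
task_data = [([1.0, 0.0], 2.0), ([0.0, 1.0], 1.0), ([1.0, 1.0], 3.0)]

tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, task_data)
print("loss before:", mse(pretrained_w, pretrained_b, task_data))
print("loss after: ", mse(tuned_w, tuned_b, task_data))
```

A real fine-tuning run works the same way in principle, only with billions of parameters. That scale is where the resource costs listed below come from.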

Advantages of Fine-tuning:

- High accuracy: Since the model's parameters are updated, it can achieve superior performance on the target task.

- Flexibility: Fine-tuning allows for adaptation across a wide range of tasks, from text classification to question answering.

- Task-specific knowledge: Fine-tuned models can better understand domain-specific terminology or nuances.

Disadvantages of Fine-tuning:

- Resource-intensive: Requires significant computational power and memory, especially for large models.

- Data requirement: Typically needs a substantial amount of labeled data; with too few examples, the model can overfit.

- Model versioning complexity: Each fine-tuned model is a separate full copy of the weights, so keeping one version per task increases storage and maintenance overhead.
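The versioning overhead is easy to estimate with back-of-the-envelope arithmetic. The sketch below uses hypothetical values: a 7-billion-parameter model stored at 2 bytes per parameter (as with 16-bit weights) and five tasks. Real sizes vary with the model and numeric precision.

```python
# Back-of-the-envelope storage cost of keeping one fine-tuned copy per task.
# The 7B-parameter size, 2 bytes/parameter (16-bit weights), and task count
# are hypothetical example values, not measurements.

def full_copies_storage_gb(num_params, bytes_per_param, num_tasks):
    """Each fine-tuned model is a complete copy of the weights."""
    return num_params * bytes_per_param * num_tasks / 1e9


per_copy = full_copies_storage_gb(7_000_000_000, 2, 1)    # 14.0 GB for one copy
five_tasks = full_copies_storage_gb(7_000_000_000, 2, 5)  # 70.0 GB for five copies
print(per_copy, five_tasks)
```

Storage grows linearly with the number of tasks, and every copy must be versioned, deployed, and updated separately.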

Use case example:

Fine-tuning is ideal when you have a large, domain-specific dataset and require the highest possible accuracy. For instance, a legal document classifier trained on thousands of annotated contracts would benefit from fine-tuning.

What is Prompt-tuning?

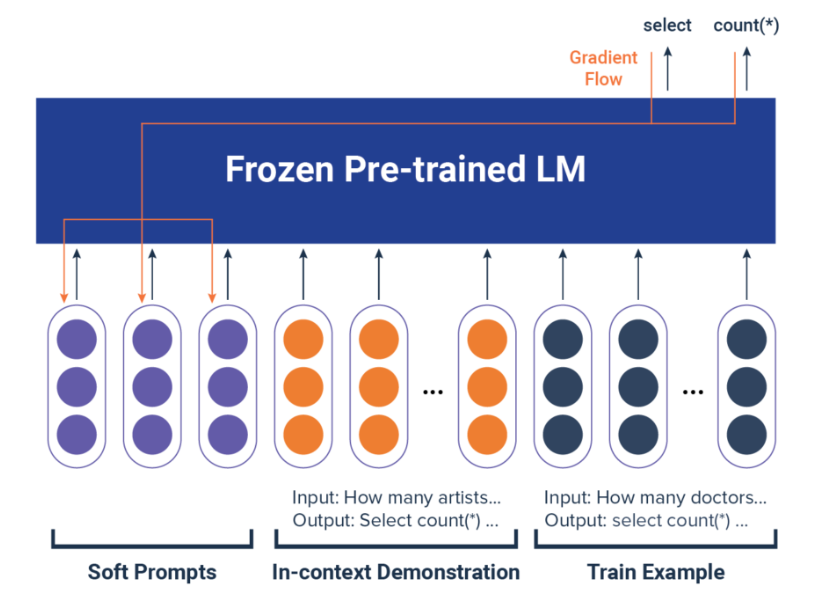

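Prompt-tuning, on the other hand, leaves the underlying model weights unchanged. Instead, it searches for an input that steers the frozen pre-trained model toward the desired output. In the strict sense, prompt-tuning learns a small set of continuous "soft prompt" embeddings that are prepended to every input. The term is also used more loosely for manually crafting text prompts or input formats. Either way, you adapt the model to your task entirely through its input.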
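In the soft-prompt sense, the "prompt" is a handful of trainable vectors placed in front of the input embeddings, and gradient descent updates only those vectors. The toy sketch below assumes a hypothetical frozen "model" that sum-pools token embeddings and applies a fixed linear head. A real LLM would route the prompt through its attention layers instead. The dimensions, weights, and data are all made up for illustration.

```python
# Toy soft prompt tuning: the "model" is frozen; only the prompt vectors
# prepended to each input are trained. The sum-pooling model, dimensions,
# and data are hypothetical; a real LLM would process the prompt through
# attention layers rather than a simple sum.

EMB_DIM = 2
FROZEN_W = [0.8, -0.3]  # frozen "model" weights: never updated
FROZEN_B = 0.1


def frozen_model(embeddings):
    """Sum-pool the token embeddings, then apply a fixed linear head."""
    pooled = [sum(vec[k] for vec in embeddings) for k in range(EMB_DIM)]
    return sum(w * p for w, p in zip(FROZEN_W, pooled)) + FROZEN_B


def tune_soft_prompt(data, prompt_len=3, lr=0.05, epochs=300):
    """Gradient descent on the prompt vectors only (MSE loss)."""
    prompt = [[0.0] * EMB_DIM for _ in range(prompt_len)]
    for _ in range(epochs):
        grad = [0.0] * EMB_DIM
        for tokens, target in data:
            err = frozen_model(prompt + tokens) - target
            # d(output)/d(prompt[j][k]) = FROZEN_W[k] for every prompt vector j
            for k in range(EMB_DIM):
                grad[k] += 2 * err * FROZEN_W[k] / len(data)
        prompt = [[v - lr * g for v, g in zip(vec, grad)] for vec in prompt]
    return prompt


# Hypothetical task: the desired output is the frozen model's output plus 1.5.
inputs = [[[1.0, 0.0], [0.0, 1.0]], [[0.5, 0.5], [1.0, 1.0]], [[0.0, 2.0], [1.0, 0.0]]]
task_data = [(tokens, frozen_model(tokens) + 1.5) for tokens in inputs]

soft_prompt = tune_soft_prompt(task_data)
for tokens, target in task_data:
    print(round(frozen_model(soft_prompt + tokens), 3), round(target, 3))
```

Because this toy model only sums embeddings, the learned prompt can only shift its output. The mechanism is still the one that matters: the model's weights never change, and the task is learned entirely in the prepended vectors.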

Advantages of Prompt-tuning:

- Lightweight: Only the prompt or a small set of additional parameters is tuned, saving memory and computation.

- Fast iteration: Adjusting prompts can be quicker than full model retraining.

- Lower data requirement: Works reasonably well even with limited labeled examples.
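How lightweight a soft prompt is can be shown by counting trainable parameters: a prompt of `prompt_len` vectors, each of size `embedding_dim`, has `prompt_len * embedding_dim` values to learn. The sizes below are hypothetical examples: a 7-billion-parameter model with 4096-dimensional embeddings and a 20-token soft prompt.

```python
# Rough count of trainable parameters: full fine-tuning vs. a soft prompt.
# All sizes are hypothetical example values (a 7B-parameter model with
# 4096-dimensional token embeddings and a 20-token soft prompt).

def soft_prompt_params(prompt_len, embedding_dim):
    """A soft prompt is prompt_len trainable vectors of size embedding_dim."""
    return prompt_len * embedding_dim


full_finetune_params = 7_000_000_000          # every weight is trainable
prompt_params = soft_prompt_params(20, 4096)  # 81,920 trainable values

fraction = prompt_params / full_finetune_params
print(f"{prompt_params:,} trainable parameters ({fraction:.5%} of the model)")
```

Under these assumptions, each task needs to store only the small prompt alongside one shared base model.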

Disadvantages of Prompt-tuning:

- Potentially lower accuracy: The performance may not match a fully fine-tuned model, especially on highly specialized tasks.

- Trial-and-error process: Designing effective prompts can be tricky and may require human intuition.

- Task limitations: May struggle with very complex or nuanced tasks that need deep domain knowledge.
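For manually designed prompts, the trial-and-error process can at least be made systematic: score each candidate template on a handful of labeled examples and keep the best one. This is discrete prompt selection, not soft prompt tuning. In the sketch, `call_model` is a hypothetical stand-in for whatever LLM API you use, and `stub_model` is a fake model written only so the example runs.

```python
from typing import Callable, List, Tuple


def best_template(
    templates: List[str],
    examples: List[Tuple[str, str]],
    call_model: Callable[[str], str],
) -> Tuple[str, float]:
    """Return the template with the highest exact-match accuracy on the examples."""
    best, best_acc = templates[0], -1.0
    for template in templates:
        correct = sum(
            call_model(template.format(text=text)).strip().lower() == label
            for text, label in examples
        )
        acc = correct / len(examples)
        if acc > best_acc:
            best, best_acc = template, acc
    return best, best_acc


# Hypothetical stand-in for an LLM call: it only answers in the expected
# label format when the prompt explicitly names the allowed labels.
def stub_model(prompt: str) -> str:
    text = prompt.lower()
    if "positive or negative" not in text:
        return "I think this review is fine."
    return "negative" if "terrible" in text or "broke" in text else "positive"


candidates = [
    "Review: {text}\nSentiment:",
    "Is this review positive or negative?\nReview: {text}\nAnswer:",
]
labeled = [
    ("Great battery life.", "positive"),
    ("It broke after a day.", "negative"),
    ("Terrible support.", "negative"),
]

template, accuracy = best_template(candidates, labeled, stub_model)
print(repr(template), accuracy)
```

With a real model, you would swap `stub_model` for an API call and use a larger held-out set. With only a few examples, the accuracy estimate is noisy.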

Use case example:

Prompt-tuning is well suited to low-resource scenarios, such as summarizing niche research papers with only a few examples or quickly adapting a model to a new chatbot persona without retraining.

When to Use Which?

| Criteria | Fine-tuning | Prompt-tuning |
|---|---|---|
| Data availability | Large labeled datasets | Few-shot or low-data scenarios |
| Compute resources | High | Low |
| Performance priority | Max accuracy | Acceptable accuracy with flexibility |
| Task complexity | Complex, domain-specific | Moderate or generic tasks |
| Maintenance | Harder to manage multiple versions | Easier, just update prompts |

- Use fine-tuning when accuracy is critical, you have enough labeled data, and you can afford the computational cost.

- Use prompt-tuning when you need agility, have limited data, or want to maintain a single base model for multiple tasks.
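The fine-tuning rule of thumb above is a conjunction of three conditions, and prompt-tuning is the fallback when any of them fails. The sketch below encodes that rule literally. The field names are hypothetical, and the rule deliberately ignores softer factors such as iteration speed or the wish to share one base model across tasks.

```python
from dataclasses import dataclass


@dataclass
class ProjectConstraints:
    """Hypothetical yes/no answers to the questions in the table above."""
    accuracy_critical: bool
    has_large_labeled_dataset: bool
    can_afford_training_compute: bool


def choose_strategy(c: ProjectConstraints) -> str:
    """Fine-tune only when all three conditions hold; otherwise prompt-tune."""
    if c.accuracy_critical and c.has_large_labeled_dataset and c.can_afford_training_compute:
        return "fine-tuning"
    return "prompt-tuning"


# Example: accuracy matters, but labeled data is scarce.
print(choose_strategy(ProjectConstraints(
    accuracy_critical=True,
    has_large_labeled_dataset=False,
    can_afford_training_compute=True,
)))
```

In practice, these criteria are rarely strictly yes or no, so treat the function as a checklist rather than a decision procedure.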

Conclusion

Both fine-tuning and prompt-tuning are powerful tools for adapting LLMs, but they serve different needs. Fine-tuning is the heavyweight champion for specialized tasks requiring precision, while prompt-tuning is the agile sprinter, ideal for rapid adaptation with fewer resources. By understanding their trade-offs, you can choose the strategy that best aligns with your project goals and constraints.