This guide explains how to set up a local AI chatbot using Ollama, an open-source platform that lets you run language models on your own computer. Unlike hosted chatbots, a local setup keeps your data on your machine and works offline. Below, we’ll walk through each step with straightforward instructions suitable for beginners.

1. Why consider a local chatbot?

Running a chatbot locally, such as with Ollama, offers several advantages:

- Privacy: Your data stays on your device, avoiding external servers.

- Offline Use: It works without an internet connection, ideal for remote or disconnected settings.

- Customization: You can adjust the model to meet specific needs or preferences.

- Speed: Responses can be quicker, depending on your hardware, without network delays.

- Learning: It’s a hands-on way to explore AI technology.

That said, online chatbots offer benefits like instant access and frequent updates. Choosing between the two depends on your goals and circumstances.

2. What is Ollama?

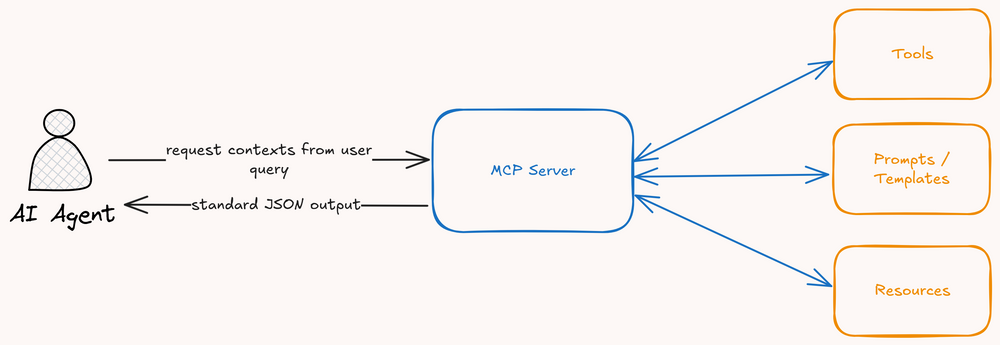

Ollama is an open-source tool that allows you to operate large language models (LLMs) directly on your device. These models power AI chatbots, enabling them to process and generate text. By running Ollama locally, you gain the ability to use advanced AI capabilities without relying on external servers or internet connectivity.

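Why Ollama?

- Lightweight and Efficient: Designed to run on ordinary local machines, although larger models still need more RAM or a GPU.

- Customizable: Model behavior can be adapted to specific use cases, for example with a custom system prompt or adjusted generation parameters.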

- Open and Transparent: Users have full control over the model and data.

3. Installation

Here’s how to get your local chatbot up and running. We’ll cover installing Ollama, adding a model, and using it, with an optional graphical interface.

3.1 Installing Ollama

First, you need Ollama, the core platform for running local models.

- macOS: Go to ollama.com, download the installer, and follow the prompts to install.

- Linux: Open a terminal and enter:

curl -fsSL https://ollama.com/install.sh | sh

This command downloads and sets up Ollama.

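- Windows: Go to ollama.com, download the Windows installer, and follow the prompts. Windows support was still in development in late 2023, so older guides may instead point to alternatives such as LM Studio.

To confirm it’s working, open your browser and visit http://localhost:11434. If the page shows the message “Ollama is running”, Ollama is ready.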
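The same check can be done from a terminal. The sketch below is a minimal example, assuming curl is installed; the helper name check_ollama and the OLLAMA_URL variable are made up for this illustration and are not part of Ollama.

```shell
# Base URL of the local Ollama server; 11434 is the port used in this guide.
# OLLAMA_URL is a variable invented for this sketch, not an Ollama setting.
OLLAMA_URL="${OLLAMA_URL:-http://localhost:11434}"

# Hypothetical helper: prints a status line and succeeds only if the server answers.
check_ollama() {
    if ! command -v curl >/dev/null 2>&1; then
        echo "curl is not installed"
        return 2
    fi
    if curl -fsS --max-time 5 "$OLLAMA_URL" >/dev/null 2>&1; then
        echo "Ollama is reachable at $OLLAMA_URL"
    else
        echo "No response from $OLLAMA_URL; is Ollama running?"
        return 1
    fi
}

# Run the check once; "|| true" keeps the script going if the server is down.
check_ollama || true
```

If the check fails right after installation, starting the Ollama application (or the background service on Linux) and trying again is a reasonable first step.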

3.2 Adding a language model

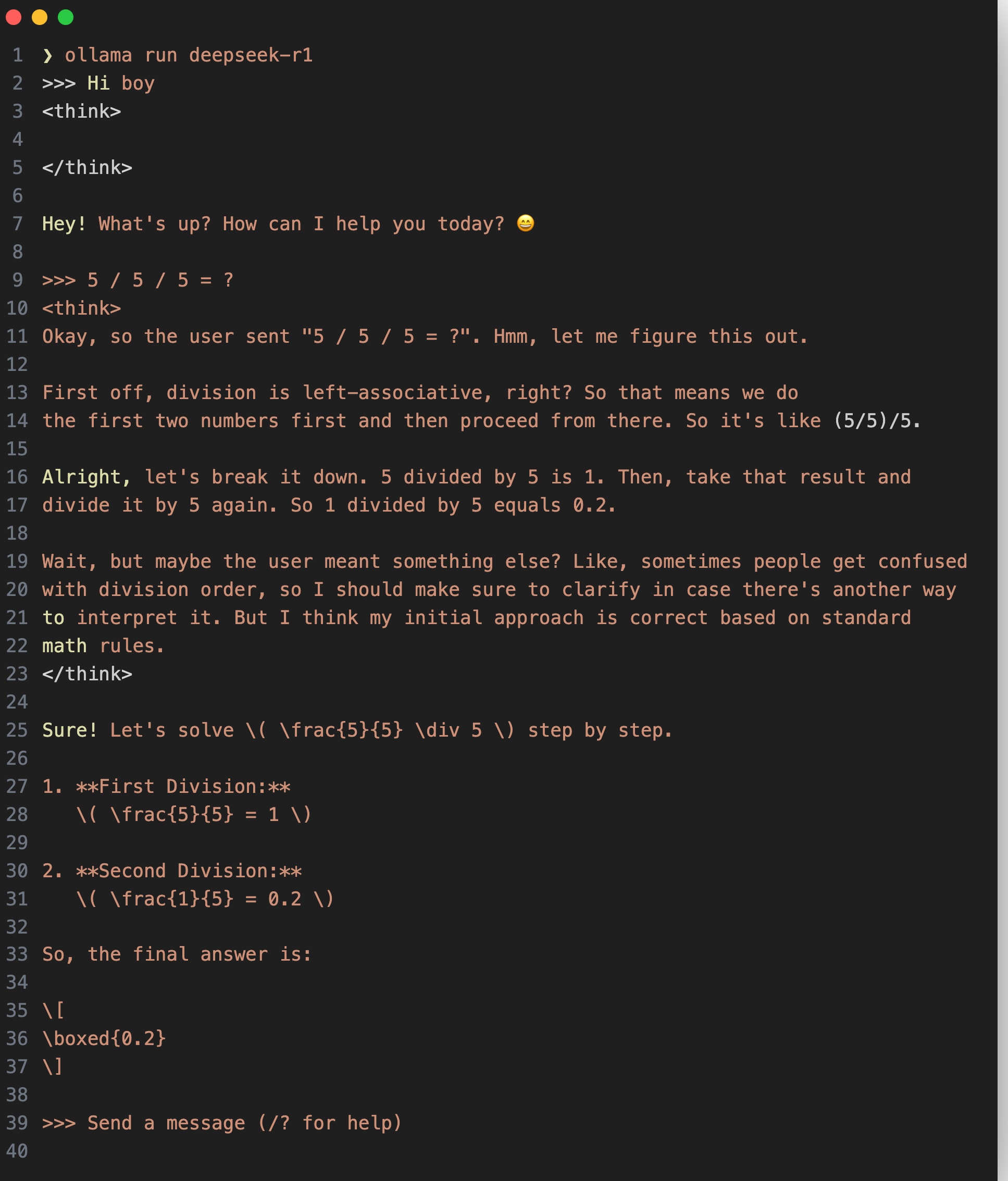

Ollama needs a language model to function as a chatbot. You can choose one from Ollama’s model library. For this guide, we’ll use deepseek-r1, but if it’s unavailable, options like mistral or llama2 work too.

How to install:

- Open a terminal.

- Type and run:

ollama pull deepseek-r1

- Wait for the download to finish. Models can be large, often a few gigabytes.

Once complete, the model is installed and ready.
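To double-check that the download succeeded, you can look for the model in the output of ollama list. The following is a rough sketch; the helper model_in_list is hypothetical and simply matches the first column of the listing against the model name, ignoring any :tag suffix.

```shell
MODEL="deepseek-r1"   # the model pulled in this guide

# Hypothetical helper: reads `ollama list` output on stdin and succeeds
# if the first column of some line is the given name, with or without a :tag.
model_in_list() {
    awk -v name="$1" '
        { split($1, parts, ":"); if (parts[1] == name) found = 1 }
        END { exit (found ? 0 : 1) }
    '
}

if command -v ollama >/dev/null 2>&1; then
    if ollama list | model_in_list "$MODEL"; then
        echo "$MODEL is installed"
    else
        echo "$MODEL not found; run: ollama pull $MODEL"
    fi
else
    echo "ollama is not on PATH"
fi
```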

3.3 Interacting via command line

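You can chat with your bot using Ollama’s command-line interface (CLI). Open a terminal and run:

ollama run deepseek-r1

Type a message at the prompt and press Enter to get a reply. Type /bye to end the session.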

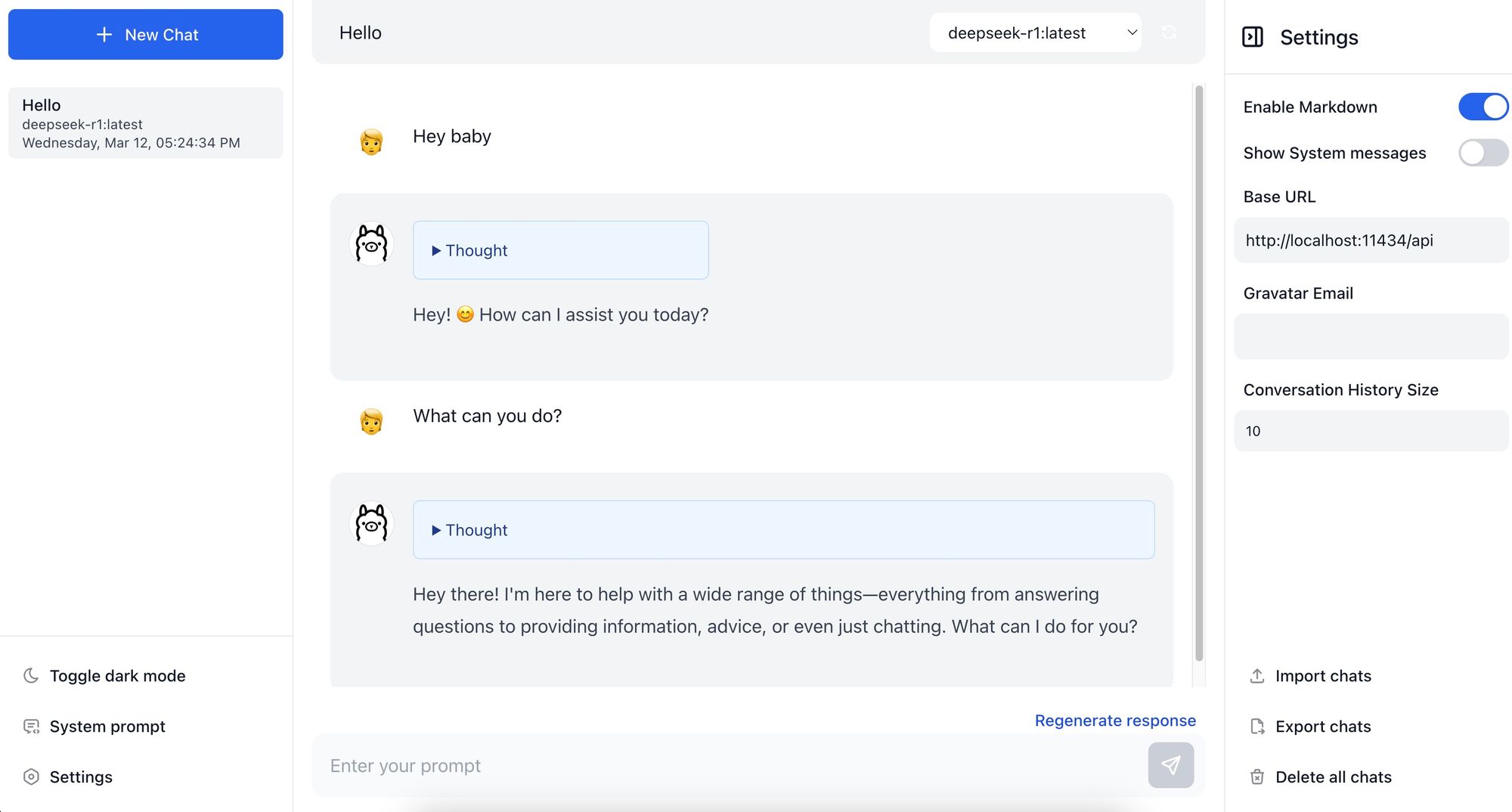
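Besides the interactive session, ollama run also accepts a prompt as an argument, which is handy for one-off questions or scripts. The wrapper below is only a sketch; the function name ask is invented for this example, and it assumes the deepseek-r1 model from the previous step is already downloaded.

```shell
MODEL="deepseek-r1"   # the model pulled earlier in this guide

# Hypothetical wrapper: send a single prompt, print the reply, and return.
ask() {
    if ! command -v ollama >/dev/null 2>&1; then
        echo "ollama is not on PATH" >&2
        return 127
    fi
    ollama run "$MODEL" "$1"
}

# Interactive session (type /bye to leave):
#   ollama run deepseek-r1
# One-shot question using the wrapper:
#   ask "Explain what a language model is in one sentence."
```

Keeping the model name in one variable makes it easy to switch to mistral or llama2 if deepseek-r1 is not available on your machine.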

3.4 Adding a graphical interface (Optional)

If you’d rather avoid the terminal, a graphical user interface (GUI) is available from HelgeSverre/ollama-gui.

How to set it up:

Visit the GitHub page HelgeSverre/ollama-gui. Follow the README steps, which usually involve cloning the repository and running setup commands.

#### Prerequisites (only needed for local development)

Install Node.js v16+ and Yarn (based on the README at https://github.com/HelgeSverre/ollama-gui).
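Before cloning the repository, it can help to confirm that both tools are present. This is a small sketch, not something taken from the project README; node_version_ok is a hypothetical helper that compares only the major version number against 16.

```shell
# Hypothetical helper: succeeds if a version string such as "v18.17.0"
# has a major version of at least 16.
node_version_ok() {
    major="${1#v}"        # drop the leading "v"
    major="${major%%.*}"  # keep only the part before the first dot
    [ "$major" -ge 16 ] 2>/dev/null
}

if command -v node >/dev/null 2>&1 && node_version_ok "$(node --version)"; then
    echo "Node.js $(node --version) is new enough"
else
    echo "Node.js v16+ not found"
fi

if command -v yarn >/dev/null 2>&1; then
    echo "Yarn $(yarn --version) found"
else
    echo "Yarn not found"
fi
```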

#### Local Development

git clone https://github.com/HelgeSverre/ollama-gui.git

cd ollama-gui

yarn install

yarn dev

Once yarn dev is running, open the local address it prints in your browser for a more visual way to chat.

This is optional; stick with the CLI if it suits you.

4. Conclusion

You’ve now created a local AI chatbot with Ollama. Here’s a summary of its strengths and challenges:

Pros:

- Keeps your data private on your device.

- Works offline, anywhere.

- Allows tailoring to your needs.

- No subscription costs after setup.

- Can be fast with good hardware.

- Offers a chance to learn about AI.

Cons:

- Needs decent hardware (e.g., ample RAM or a GPU) for larger models.

- Models don’t update automatically like online versions.

- Setup takes more effort than online alternatives.

To improve performance, consider running on a GPU with enough memory to hold your chosen model. You might also browse Ollama’s library for new models or try customizing one for specific tasks.
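As one possible starting point for customization, Ollama can build a variant of an existing model from a Modelfile. The sketch below assumes deepseek-r1 is already downloaded; the helper write_modelfile, the model name my-assistant, the temperature value, and the system prompt are all illustrative choices rather than recommendations.

```shell
# Hypothetical helper: write a small Modelfile into the given directory.
write_modelfile() {
    cat > "$1/Modelfile" <<'EOF'
FROM deepseek-r1
PARAMETER temperature 0.7
SYSTEM """You are a concise assistant that answers in plain language."""
EOF
}

workdir="$(mktemp -d)"
write_modelfile "$workdir"

if command -v ollama >/dev/null 2>&1; then
    # "my-assistant" is a made-up name for the customized model.
    ollama create my-assistant -f "$workdir/Modelfile" && ollama run my-assistant "Hello"
else
    echo "ollama is not on PATH; Modelfile written to $workdir/Modelfile"
fi
```

This changes how the existing model is prompted and sampled; it does not retrain the model's weights.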