As Large Language Models (LLMs) are connected to more tools and data sources, dependable communication between models and external systems has become a necessity. The Model Context Protocol (MCP) is an open standard that defines how AI agents obtain, exchange, and act upon contextual data. This article explores the fundamentals of MCP, its significance in the AI ecosystem, and how it is reshaping multi-agent collaboration and system integration.

What Is MCP?

The Model Context Protocol is a standardized, transport-agnostic way for AI agents to:

- Discover available tools, data sources, and capabilities

- Request structured context

- Invoke actions safely

- Stream results and intermediate state

It reduces the need for ad-hoc, per-integration plugin contracts and replaces brittle prompt stuffing (pasting raw context into the prompt) with explicit, typed exchanges.

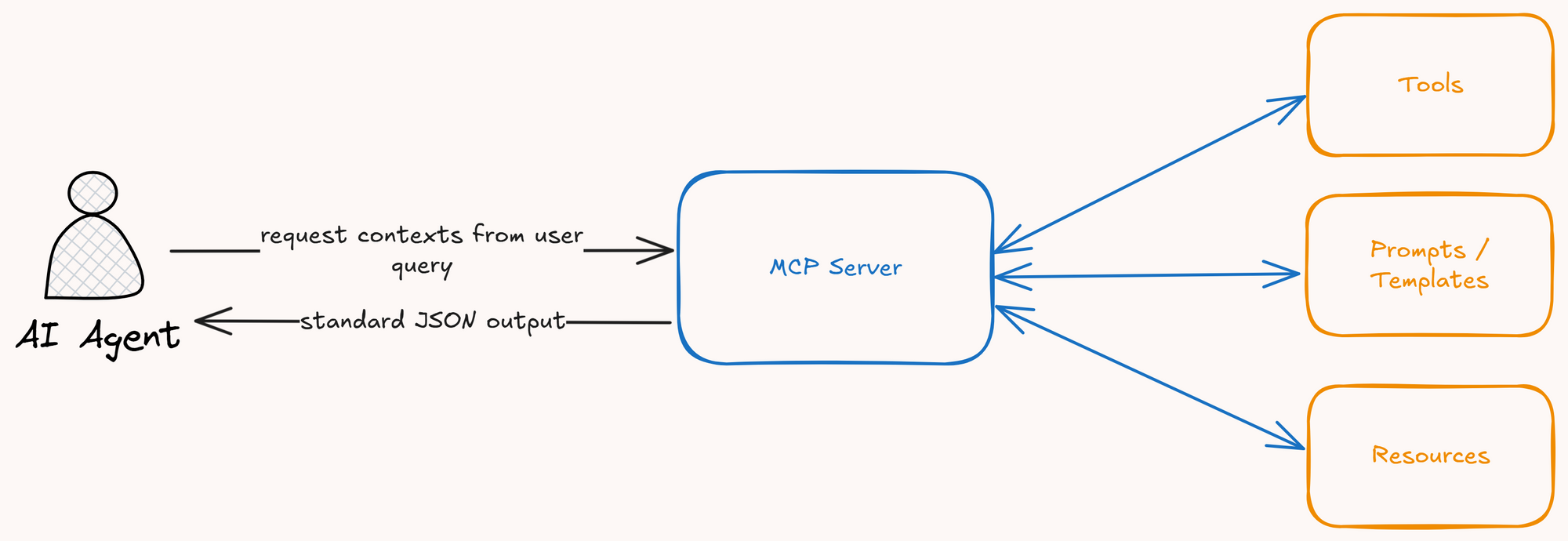

Core Concepts

- Resources: Read-only contextual objects (files, configs, embeddings).

- Tools: Executable operations with declared input/output schemas.

- Prompts / Templates: Reusable contextual bundles served to models.

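- Permissions: Fine-grained gating of which tools and resources are exposed, typically enforced by the host and the server (for example, by asking the user to confirm before a tool runs).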
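To make these concepts concrete, here is a minimal Python sketch of a server-side registry. The listed field names (`name`, `description`, `inputSchema`, `uri`, `mimeType`) loosely mirror the shapes MCP uses when a server lists its tools and resources, but the `Registry` class, its `allowed` permission set, and the example entries are hypothetical illustrations rather than any SDK's API.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass(frozen=True)
class Resource:
    """Read-only contextual object, addressed by URI."""
    uri: str
    name: str
    mime_type: str


@dataclass(frozen=True)
class Tool:
    """Executable operation with a declared JSON Schema for its input."""
    name: str
    description: str
    input_schema: dict[str, Any]
    handler: Callable[..., Any]


@dataclass(frozen=True)
class Prompt:
    """Reusable template the host can render into model context."""
    name: str
    template: str

    def render(self, **kwargs: str) -> str:
        return self.template.format(**kwargs)


@dataclass
class Registry:
    resources: dict[str, Resource] = field(default_factory=dict)
    tools: dict[str, Tool] = field(default_factory=dict)
    prompts: dict[str, Prompt] = field(default_factory=dict)
    # Hypothetical permission model: names/URIs this client may see.
    allowed: set[str] = field(default_factory=set)

    def list_tools(self) -> list[dict[str, Any]]:
        # Only expose tools the current client is permitted to use.
        return [
            {"name": t.name, "description": t.description, "inputSchema": t.input_schema}
            for t in self.tools.values()
            if t.name in self.allowed
        ]

    def list_resources(self) -> list[dict[str, str]]:
        return [
            {"uri": r.uri, "name": r.name, "mimeType": r.mime_type}
            for r in self.resources.values()
            if r.uri in self.allowed
        ]


registry = Registry()
registry.tools["searchLogs"] = Tool(
    name="searchLogs",
    description="Search application logs for a text query.",
    input_schema={
        "type": "object",
        "properties": {"query": {"type": "string"}, "limit": {"type": "integer"}},
        "required": ["query"],
    },
    handler=lambda query, limit=10: [],  # placeholder handler
)
registry.tools["deploy"] = Tool("deploy", "Deploy the current build.", {"type": "object"}, lambda: "ok")
registry.resources["file:///etc/app/config.yaml"] = Resource(
    "file:///etc/app/config.yaml", "App config", "application/yaml"
)
registry.prompts["triage"] = Prompt("triage", "Summarize errors matching '{query}'.")
registry.allowed = {"searchLogs", "file:///etc/app/config.yaml"}

print([t["name"] for t in registry.list_tools()])  # ['searchLogs']
```

Filtering at listing time means a client never learns about tools it may not call; it complements, but does not replace, checking permissions again when a tool is actually invoked.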

High-Level Flow

- The client (inside the LLM host application) connects to an MCP server.

- Client and server negotiate capabilities (tools, resources, prompts).

- The model requests context (resource listings, prompt retrieval).

- The model invokes a tool with structured arguments.

- The server streams progress events.

- The result is packaged and appended to the conversation context.
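The flow above can be sketched as a toy, in-process exchange of JSON-RPC 2.0 messages, which is the framing MCP uses on the wire. The method names `initialize`, `tools/list`, `tools/call`, and `notifications/progress` come from the MCP specification, but the payloads here are trimmed (for instance, the `notifications/initialized` message a client sends after the handshake is omitted), the protocol version string is only an example, and `ToyServer` is a hypothetical stand-in with no real transport. A production client would use an MCP SDK instead.

```python
import json
from typing import Any, Callable

Message = dict[str, Any]


class ToyServer:
    """Hypothetical in-process stand-in for an MCP server (no real transport)."""

    def __init__(self, send: Callable[[Message], None]) -> None:
        self.send = send  # channel for server-initiated notifications

    def handle(self, req: Message) -> Message:
        method, params = req["method"], req.get("params", {})
        if method == "initialize":
            result = {
                "protocolVersion": params["protocolVersion"],  # echo the requested revision
                "capabilities": {"tools": {}},
                "serverInfo": {"name": "toy-server", "version": "0.1.0"},
            }
        elif method == "tools/list":
            result = {"tools": [{
                "name": "searchLogs",
                "inputSchema": {"type": "object", "properties": {"query": {"type": "string"}}},
            }]}
        elif method == "tools/call":
            token = params.get("_meta", {}).get("progressToken")
            hits = ["TimeoutError: connection lost"]  # fake search result
            for step in (1, 2):
                if token is not None:
                    # Stream intermediate state before the final response.
                    self.send({"jsonrpc": "2.0", "method": "notifications/progress",
                               "params": {"progressToken": token, "progress": step, "total": 2}})
            result = {"content": [{"type": "text", "text": json.dumps(hits)}], "isError": False}
        else:
            # -32601 is the JSON-RPC 2.0 "Method not found" error code.
            return {"jsonrpc": "2.0", "id": req["id"],
                    "error": {"code": -32601, "message": f"Method not found: {method}"}}
        return {"jsonrpc": "2.0", "id": req["id"], "result": result}


notifications: list[Message] = []
server = ToyServer(notifications.append)

# Steps 1-2: connect and negotiate capabilities.
init = server.handle({"jsonrpc": "2.0", "id": 1, "method": "initialize",
                      "params": {"protocolVersion": "2025-06-18", "capabilities": {},
                                 "clientInfo": {"name": "toy-client", "version": "0.1.0"}}})
# Step 3: discover what the server offers (resources/list and prompts/list work similarly).
tools = server.handle({"jsonrpc": "2.0", "id": 2, "method": "tools/list"})
# Steps 4-5: invoke a tool with structured arguments and ask for progress updates.
call = server.handle({"jsonrpc": "2.0", "id": 3, "method": "tools/call",
                      "params": {"name": "searchLogs",
                                 "arguments": {"query": "timeout error", "limit": 25},
                                 "_meta": {"progressToken": "abc123"}}})
# Step 6: append the tool output to the conversation context.
context = [block["text"] for block in call["result"]["content"]]
print(len(notifications), context)
```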

Example Interaction

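The client invokes a tool with structured arguments:

```json
{
  "type": "tool.invoke",
  "tool": "searchLogs",
  "arguments": { "query": "timeout error", "limit": 25 },
  "requestId": "abc123"
}
```

The server replies with a result that carries the same `requestId`:

```json
{
  "type": "tool.result",
  "requestId": "abc123",
  "status": "ok",
  "data": [
    { "timestamp": "...", "line": "TimeoutError: connection lost" }
  ]
}
```

These messages are simplified for illustration; on the wire, MCP frames requests and responses as JSON-RPC 2.0 messages (a tool call uses the `tools/call` method, and replies are matched to requests by `id`).

Common Use Cases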

a. Software Development

Developers can use MCP-enabled AI agents to access code repositories, run tests, and deploy applications, all through natural-language commands.

b. Data Analysis

Analysts can leverage AI agents that connect to multiple data sources, perform complex queries, and generate insights without writing code.

c. Customer Support

Support systems can use MCP to give AI agents access to knowledge bases, ticket systems, and customer data for more effective problem resolution.

Challenges

a. Versioning of Tool Schemas

When tools evolve (new parameters, renamed fields, removed outputs), older clients may break or misinterpret results. Silent schema drift leads the model to hallucinate arguments or send malformed invocations. Mixing schema versions within one session amplifies the ambiguity (e.g., a cached prompt refers to a deprecated argument). Without explicit semantic version tags and negotiation, rollback is painful.
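One way to make schema versions explicit is to tag each tool schema with a semantic version and have the client check compatibility before invoking anything. MCP itself does not define a per-tool version field, so the `version` key, the `negotiate` helper, and the rule "same major version, and the server's minor version is at least the one the client expects" are assumptions made for this sketch.

```python
from typing import Any


def parse_semver(v: str) -> tuple[int, int, int]:
    major, minor, patch = (int(part) for part in v.split("."))
    return major, minor, patch


def is_compatible(client_expects: str, server_offers: str) -> bool:
    """Compatible if major versions match and the server's minor is not older."""
    c_major, c_minor, _ = parse_semver(client_expects)
    s_major, s_minor, _ = parse_semver(server_offers)
    return c_major == s_major and s_minor >= c_minor


def negotiate(client_pins: dict[str, str], server_tools: list[dict[str, Any]]) -> dict[str, list[str]]:
    """Split the client's pinned tools into usable and rejected lists."""
    offered = {t["name"]: t["version"] for t in server_tools}  # hypothetical "version" field
    usable, rejected = [], []
    for name, expected in client_pins.items():
        version = offered.get(name)
        if version is not None and is_compatible(expected, version):
            usable.append(name)
        else:
            # Fail loudly instead of letting the model guess at a changed schema.
            rejected.append(name)
    return {"usable": usable, "rejected": rejected}


server_tools = [
    {"name": "searchLogs", "version": "1.3.0"},  # additive change: new optional param
    {"name": "deploy", "version": "2.0.0"},      # breaking change: renamed field
]
result = negotiate({"searchLogs": "1.2.0", "deploy": "1.4.1", "runTests": "1.0.0"}, server_tools)
print(result)  # {'usable': ['searchLogs'], 'rejected': ['deploy', 'runTests']}
```

Rejected tools can then be hidden from the model for the session, which keeps a stale cached prompt from steering it toward a schema that no longer exists.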

b. Latency for Large Resource Hydration

Fetching large codebases, documents, or vector batches can stall the agent’s reasoning loop, leading to timeouts or premature reasoning with incomplete context. Streaming helps, but naive full hydration wastes bandwidth and token budget.
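A common mitigation is to hydrate lazily: return a cheap summary first, then stream chunks only while a token budget allows. In this sketch, tokens are approximated by whitespace-separated words, and `summarize`, `hydrate`, and the budget figures are illustrative assumptions rather than anything MCP prescribes.

```python
from typing import Iterator


def summarize(doc: str, max_words: int = 12) -> str:
    """Lightweight preview the model can inspect before asking for more."""
    words = doc.split()
    head = " ".join(words[:max_words])
    return f"{len(words)} words; starts: {head}" + (" ..." if len(words) > max_words else "")


def hydrate(doc: str, chunk_words: int, budget: int) -> Iterator[str]:
    """Yield chunks of the document until the next one would exceed the budget.

    Tokens are approximated as whitespace-separated words (an assumption;
    a real system would count with the model's tokenizer).
    """
    words = doc.split()
    spent = 0
    for start in range(0, len(words), chunk_words):
        chunk = words[start:start + chunk_words]
        if spent + len(chunk) > budget:
            break  # stop early rather than overflow the context window
        spent += len(chunk)
        yield " ".join(chunk)


doc = " ".join(f"line{i}" for i in range(100))
print(summarize(doc))
chunks = list(hydrate(doc, chunk_words=30, budget=70))
print(len(chunks), chunks[-1].split()[-1])
```

Because `hydrate` is a generator, the consumer can also stop pulling chunks as soon as it has enough context, not only when the budget runs out.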

c. Secure Sandboxing of Side-Effect Tools

Tools that mutate state (deploy, write file, trigger pipeline) risk misuse if the model misinterprets instructions or an adversarial prompt causes unintended actions. Overly broad shell or network access turns an MCP server into a lateral movement surface.
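A minimal guard, sketched below, separates read-only tools from side-effecting ones, rejects anything not on an allowlist, and requires explicit approval before a write runs. The `Guard` class, the `approve` callback, and the tool names are hypothetical; a check like this sits on top of, and does not replace, process-level isolation such as containers, restricted users, and no default network access.

```python
from dataclasses import dataclass
from typing import Any, Callable


class ToolDenied(Exception):
    pass


@dataclass
class Guard:
    read_only: set[str]        # tools with no side effects
    side_effecting: set[str]   # tools that mutate state
    approve: Callable[[str, dict[str, Any]], bool]  # hypothetical human-in-the-loop hook

    def check(self, tool: str, args: dict[str, Any]) -> None:
        if tool in self.read_only:
            return  # reads are allowed without confirmation
        if tool not in self.side_effecting:
            raise ToolDenied(f"{tool!r} is not on the allowlist")
        if not self.approve(tool, args):
            raise ToolDenied(f"{tool!r} was not approved")


approvals: list[str] = []


def approve_only_staging(tool: str, args: dict[str, Any]) -> bool:
    approvals.append(tool)
    return args.get("env") == "staging"  # stand-in for a real confirmation prompt


guard = Guard(read_only={"searchLogs", "readFile"}, side_effecting={"deploy"},
              approve=approve_only_staging)

guard.check("searchLogs", {"query": "timeout"})  # passes without approval
guard.check("deploy", {"env": "staging"})        # approved
denied: list[str] = []
for tool, args in [("deploy", {"env": "prod"}), ("shell", {"cmd": "ls"})]:
    try:
        guard.check(tool, args)
    except ToolDenied as exc:
        denied.append(str(exc))
        print("denied:", exc)
```

Unknown tools such as `shell` are denied by default, so widening access requires an explicit change to the allowlist rather than happening by accident.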

d. Coordinating Multiple Concurrent Sessions

Several agents (planner, executor, reviewer) may compete for tool access, creating race conditions (e.g., one agent acts on a config that another has just changed). Without concurrency control, interleaved streams confuse attribution and degrade reasoning quality.
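Optimistic concurrency control is one way to catch the stale-read case: every write carries the version the agent last read, and the store rejects it if someone else has written since. The `VersionedStore` class and the agent names are hypothetical; this is a generic compare-and-set pattern, not an MCP feature.

```python
import threading
from typing import Any


class StaleWrite(Exception):
    pass


class VersionedStore:
    """Shared config resource guarded by a version number (generic compare-and-set)."""

    def __init__(self, value: dict[str, Any]) -> None:
        self._lock = threading.Lock()
        self._value = dict(value)
        self._version = 0

    def read(self) -> tuple[dict[str, Any], int]:
        with self._lock:
            return dict(self._value), self._version

    def write(self, new_value: dict[str, Any], expected_version: int, agent: str) -> int:
        with self._lock:
            if expected_version != self._version:
                # The agent name in the error keeps attribution clear.
                raise StaleWrite(f"{agent} read v{expected_version}, store is at v{self._version}")
            self._value = dict(new_value)
            self._version += 1
            return self._version


store = VersionedStore({"timeout_s": 30})

planner_cfg, planner_v = store.read()
executor_cfg, executor_v = store.read()

# The executor commits first.
store.write({**executor_cfg, "timeout_s": 60}, executor_v, agent="executor")

# The planner's write is based on a stale read, so it is rejected
# instead of silently overwriting the executor's change.
try:
    store.write({**planner_cfg, "retries": 3}, planner_v, agent="planner")
except StaleWrite as exc:
    print("retry needed:", exc)
    fresh_cfg, fresh_v = store.read()
    store.write({**fresh_cfg, "retries": 3}, fresh_v, agent="planner")

print(store.read())
```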

Best Practices

- Keep tools granular and composable.

- Provide lightweight summaries for large resources before full fetch.

- Use streaming for long-running tasks.

- Separate read vs. write capabilities explicitly.

- Log every invocation with correlation IDs.
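The last practice, logging every invocation with a correlation ID, can be as simple as wrapping each tool call so that its start and end records share one ID (here reusing the request's ID when one is available). The `logged_call` helper and the record fields are illustrative assumptions; records go to an in-memory list in this sketch and would normally feed a structured logging pipeline.

```python
import time
import uuid
from typing import Any, Callable, Optional

LOG: list[dict[str, Any]] = []  # stand-in for a structured log sink


def logged_call(tool: str, handler: Callable[..., Any], args: dict[str, Any],
                correlation_id: Optional[str] = None) -> Any:
    """Run a tool handler, recording start/end (or error) events under one ID."""
    cid = correlation_id or uuid.uuid4().hex
    LOG.append({"event": "tool.start", "cid": cid, "tool": tool, "args": args})
    started = time.perf_counter()
    try:
        result = handler(**args)
    except Exception as exc:
        LOG.append({"event": "tool.error", "cid": cid, "tool": tool, "error": repr(exc)})
        raise
    LOG.append({"event": "tool.end", "cid": cid, "tool": tool,
                "ms": round((time.perf_counter() - started) * 1000, 3)})
    return result


def search_logs(query: str, limit: int = 10) -> list[str]:
    return [f"match for {query!r}"][:limit]  # fake handler for illustration


logged_call("searchLogs", search_logs, {"query": "timeout error", "limit": 25},
            correlation_id="abc123")
print([(r["event"], r["cid"]) for r in LOG])
```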

Conclusion

MCP formalizes how AI agents discover, request, and act on context, enabling secure, interoperable, and reliable integrations. Adopting it early positions teams for multi-agent collaboration and easier backend evolution. Start small: define one resource, one tool, validate the schema, then expand.
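For the "validate the schema" step, the sketch below checks a tool's arguments against a small subset of JSON Schema (`type`, `properties`, `required`, `additionalProperties: false`) before any handler runs. It is a hand-rolled illustration that covers only those keywords, so a real server would more likely use a full JSON Schema validator; the schema itself is a hypothetical one modeled on the `searchLogs` arguments in the example interaction.

```python
from typing import Any

# Hand-rolled subset of JSON Schema for illustration only:
# type names mapped to the Python types that satisfy them.
TYPES: dict[str, tuple[type, ...]] = {
    "string": (str,),
    "integer": (int,),
    "number": (int, float),
    "boolean": (bool,),
    "object": (dict,),
    "array": (list,),
}


def validate(args: dict[str, Any], schema: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the arguments are valid."""
    errors = []
    props = schema.get("properties", {})
    for name in schema.get("required", []):
        if name not in args:
            errors.append(f"missing required argument {name!r}")
    for name, value in args.items():
        if name not in props:
            if schema.get("additionalProperties") is False:
                errors.append(f"unexpected argument {name!r}")
            continue
        expected = props[name].get("type")
        # bool is a subclass of int in Python, so reject it unless "boolean" is expected.
        if expected in TYPES and (
            not isinstance(value, TYPES[expected])
            or (isinstance(value, bool) and expected != "boolean")
        ):
            errors.append(f"{name!r} should be {expected}, got {type(value).__name__}")
    return errors


SEARCH_LOGS_SCHEMA = {
    "type": "object",
    "properties": {
        "query": {"type": "string"},
        "limit": {"type": "integer"},
    },
    "required": ["query"],
    "additionalProperties": False,
}

print(validate({"query": "timeout error", "limit": 25}, SEARCH_LOGS_SCHEMA))  # []
print(validate({"limit": "25", "verbose": True}, SEARCH_LOGS_SCHEMA))
```

Returning every problem at once, rather than stopping at the first, gives the model a complete correction to apply on its next attempt.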
