Tiny AI refers to lightweight, efficient AI models that significantly reduce computational requirements, often by a factor of 10, while still performing tasks that traditionally require large datasets and powerful hardware.

In this article, we look at how Tiny AI models, compact yet powerful systems, are reshaping the future of artificial intelligence. We’ll explore:

- Why Tiny models matter: the significance of Tiny AI models and the advantages they offer compared to traditional large-scale models.

- Popular Tiny AI architectures: the lightweight vision and language architectures used to develop and optimize Tiny AI models for various use cases.

- Applications of Tiny AI: how Tiny AI is being applied in multiple fields, including healthcare, agriculture, IoT, …

- Challenges and limitations: understand the key barriers facing Tiny AI models, and explore strategies developers can use to overcome them.

- Future trends: the evolving landscape of Tiny AI.

Why Tiny Models Matter

While large AI models continue to grow and push the boundaries of performance, they are difficult to deploy at scale because they depend on expensive infrastructure.

In contrast, tiny models can run directly on devices such as mobile phones, IoT sensors, and wearables. They offer several advantages over large models, including real-time responsiveness, enhanced data privacy, and energy efficiency with a lower carbon footprint.

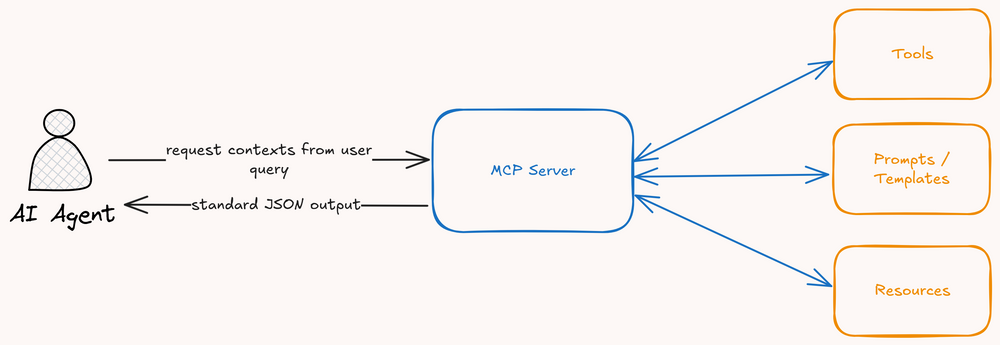

Furthermore, the recent NVIDIA Research paper "Small Language Models are the Future of Agentic AI" argues that small language models (SLMs) are sufficiently powerful for many tasks within agentic systems.

Popular Tiny AI Architectures

Tiny AI models strike a careful balance between accuracy and efficiency.

In computer vision, lightweight CNNs such as MobileNet, EfficientNet-Lite, and ShuffleNet are widely adopted. These models are specifically engineered to reduce the number of parameters and floating-point operations (FLOPs), enabling real-time inference on mobile and edge devices.
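To make the parameter and FLOP savings concrete, here is a minimal sketch that compares a standard convolution with the depthwise separable convolution that MobileNet is built on. The layer sizes (3x3 kernel, 128 channels, 56x56 feature map) and the function names are illustrative assumptions, not values taken from any specific model, and bias terms are ignored for simplicity.

```python
def standard_conv_cost(k, c_in, c_out, h, w):
    """Parameters and multiply-adds of a k x k standard convolution.

    Assumes stride 1, 'same' padding, and no bias.
    """
    params = k * k * c_in * c_out
    mult_adds = params * h * w  # every weight is applied at each output position
    return params, mult_adds


def depthwise_separable_cost(k, c_in, c_out, h, w):
    """Parameters and multiply-adds of a depthwise (k x k) + pointwise (1 x 1) pair."""
    depthwise_params = k * k * c_in   # one k x k filter per input channel
    pointwise_params = c_in * c_out   # 1 x 1 convolution that mixes channels
    params = depthwise_params + pointwise_params
    mult_adds = params * h * w
    return params, mult_adds


# Hypothetical layer: 3x3 kernel, 128 -> 128 channels, 56x56 feature map.
std_params, std_madds = standard_conv_cost(3, 128, 128, 56, 56)
sep_params, sep_madds = depthwise_separable_cost(3, 128, 128, 56, 56)
reduction = std_params / sep_params

print(f"standard:  {std_params:,} params, {std_madds:,} multiply-adds")
print(f"separable: {sep_params:,} params, {sep_madds:,} multiply-adds")
print(f"reduction: {reduction:.1f}x")
```

For a 3x3 kernel the saving approaches 9x as the number of output channels grows, which is why this factorization is a common starting point for mobile vision models.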

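For NLP, transformer-based models like DistilBERT, TinyBERT, and ALBERT substantially reduce the number of parameters while still retaining competitive accuracy on downstream tasks. DistilBERT, for example, is about 40% smaller than BERT-base while retaining about 97% of its language-understanding performance.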
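DistilBERT and TinyBERT are trained with knowledge distillation, where a small student model learns to match the outputs of a larger teacher. The sketch below shows only the basic soft-target loss on a single example; it is not the full training objective of either model, and the logits and the temperature value are made-up assumptions for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over temperature-scaled logits."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student predictions
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


# Hypothetical logits for a 3-class problem.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]  # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # prefers a different class

loss_close = distillation_loss(close_student, teacher)
loss_far = distillation_loss(far_student, teacher)
print(f"close student loss: {loss_close:.4f}")
print(f"far student loss:   {loss_far:.4f}")
```

A student that agrees with the teacher gets a loss near zero, and one that disagrees is penalized more heavily. In practice this term is usually combined with the ordinary task loss on labeled data.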

Applications of Tiny AI

Tiny AI models are set to impact many fields. Some key examples:

Healthcare: Tiny AI enables low-power, real-time health monitoring and supports early diagnosis of critical conditions like cancer and stroke, even in resource-constrained environments.

- Tiny Data: data is often scarce.

- Tiny Hardware: edge devices such as wearables, IoT sensors, …

- Tiny Algorithms: lightweight models like MobileNet, DistilBERT, Meerkat, …

Agriculture: Tiny AI enables real-time crop monitoring, disease detection, and precision farming directly on low-power devices, making smart agriculture more sustainable and accessible for farmers in resource-limited settings.

Smart Homes: Tiny AI models are increasingly deployed in smart homes to enable real-time, private, and energy-efficient automation.

- Voice Assistants: These can operate offline, ensuring faster responses and enhanced privacy by keeping data local.

- Security Cameras: Capable of detecting motion, recognizing individuals, and distinguishing between pets and intruders—all without sending data to the cloud.

- Energy Optimization: Tiny AI helps adjust lighting, heating, and appliances based on user behavior, contributing to smarter and greener living environments.

Challenges and Limitations

Although adoption of tiny models is growing, they still face notable challenges that limit wider use.

- Accuracy and Efficiency: Tiny models sacrifice some accuracy when compressed for efficiency.

- Limited Generalization: They perform well in narrow tasks but struggle in broader or unseen contexts.

- Training Complexity: Designing and distilling small models still requires significant expertise and resources.

- Deployment Constraints: Hardware variation makes edge deployment and optimization challenging.

- Ecosystem Maturity: Tooling, benchmarks, and best practices for tiny models remain less developed.
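The accuracy cost of compression mentioned in the first point can be seen in a small example. The sketch below uses symmetric 8-bit quantization, one common compression technique chosen here as an assumption, since the list does not name a specific method. The weight values and function names are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the integers back to approximate float values."""
    return [q * scale for q in quantized]


# Hypothetical weights from a small layer.
weights = [0.82, -0.41, 0.03, -1.27, 0.56]

q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("quantized:", q)
print("scale:", scale)
print("max round-trip error:", max_error)
```

Each weight now fits in one byte instead of four, at the price of a rounding error of at most half the scale per weight. Whether that error noticeably affects accuracy depends on the model and the task.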

Future Trends

Tiny models will represent the future of accessible, sustainable, and domain-adapted AI.

- Hybrid AI pattern: In 2024, IBM Research reported in "Bigger isn’t always better: How hybrid AI pattern enables smaller language models" that Small Language Models (SLMs) not only reduce infrastructure costs but also improve efficiency.

- Real-time edge AI: In recent years, smartphones and wearables have grown rapidly in capability, so hardware is no longer the main barrier to running models on the device. This shift reduces dependency on cloud computing and contributes to a greener, more sustainable AI ecosystem.

- Domain-Specific SLMs: In 2025, a Nature Digital Medicine article highlighted the rise of SLMs built for domain-specific applications.

Conclusion

Tiny AI models not only lower costs and energy consumption but also democratize access to AI, making advanced technology practical in areas such as healthcare, smartphones, and agriculture, where real-time, private, and sustainable operation is essential in everyday applications.